
How to Create a Web Crawler in Python: A Step-by-Step Guide

Are you interested in learning how to make a web crawler in Python?

Look no further! In this article, we will guide you through the process of creating your very own web crawler using the power and simplicity of Python. Whether you’re a beginner or an experienced programmer, our step-by-step instructions and code examples will help you get started quickly. So let’s dive right in and discover how to harness the potential of Python for web crawling!

Web crawling is an essential technique used to extract data from websites automatically. With Python’s user-friendly syntax and rich library ecosystem, it has become a popular choice for building web crawlers. In this tutorial, we’ll walk you through the fundamental concepts behind web crawling and show you how to implement a basic web crawler using Python. By following along with our code examples, you’ll gain valuable insights into handling HTTP requests, parsing HTML content, navigating website structures, and storing extracted data. Whether your goal is gathering information for research purposes or automating repetitive tasks like scraping product prices or monitoring website changes, this article will equip you with the necessary skills to build your own powerful web crawler in Python.

Remember: the key to successful programming lies not just in understanding the code but also in grasping the core concepts behind it. Let's embark on this exciting journey together as we demystify the process of making a web crawler in Python!

What is a Web Crawler?

A web crawler, also known as a spider or a bot, is an automated program that systematically explores and indexes the vast amount of information available on the internet. It navigates through websites by following links and collecting data from each page it visits.

Web crawlers are essential for search engines like Google, Bing, and Yahoo to index web pages effectively. They help search engines build their databases and provide users with relevant search results.

Here are some key points about web crawlers:

- Purpose: The primary purpose of a web crawler is to gather information from websites in an organized manner. The collected data can include text content, images, URLs, metadata, and more.

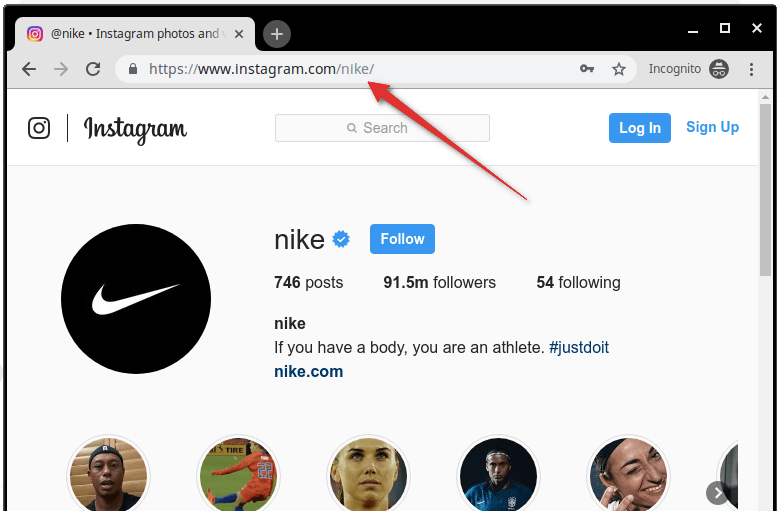

- Traversal: A crawler starts its journey from a seed URL provided by the programmer or search engine algorithm. It then follows hyperlinks found on each visited page to discover new URLs to crawl.

- Indexing: As the crawler visits various pages, it extracts relevant information and stores it in a database for further processing. Search engines use this indexed data to respond quickly when users make queries.

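- Robots.txt: Websites can tell crawlers which parts of the site they may visit by placing rules in a robots.txt file in their root directory. Well-behaved crawlers read this file before crawling the site and respect the restrictions set by the website owner; compliance is voluntary, so robots.txt is a request rather than an enforcement mechanism.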

- Respectful Crawling: To avoid overwhelming servers with excessive requests and causing disruptions or penalties, responsible crawlers follow polite crawling practices such as obeying crawl delays specified in robots.txt files.

- Crawl Budget: Search engines allocate resources based on a website’s importance during crawling sessions; this allocation is called “crawl budget.” Popular sites receive more frequent crawls compared to less popular ones.
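Python's standard library can read robots.txt rules through urllib.robotparser. The sketch below parses a small, made-up robots.txt (the rules, the crawler name MyCrawler, and the example.com URLs are hypothetical) and checks whether specific URLs may be fetched and which crawl delay applies. In a real crawler you would instead call set_url() with the site's robots.txt address and then read() to download it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from
# https://<site>/robots.txt using set_url() followed by read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

USER_AGENT = "MyCrawler"  # hypothetical crawler name

for url in ("https://example.com/articles/1", "https://example.com/private/data"):
    allowed = parser.can_fetch(USER_AGENT, url)
    print(url, "allowed" if allowed else "disallowed")

# Seconds to wait between requests, or None if no Crawl-delay is set.
print("Crawl delay:", parser.crawl_delay(USER_AGENT))
```

Note that Crawl-delay is a nonstandard directive, so some sites omit it and some crawlers ignore it; a polite crawler can fall back to its own default delay when crawl_delay() returns None.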

In summary, web crawlers play a vital role in cataloging immense amounts of online information efficiently for search engine indexing purposes.

Choosing the Right Python Libraries

When it comes to building a web crawler in Python, selecting the right libraries is crucial. These libraries provide valuable functionality and simplify the development process. Here are some essential Python libraries that can help you create an efficient web crawler:

- Requests: This library allows you to send HTTP requests easily, making it ideal for retrieving web pages while handling redirects and cookies.

- Beautiful Soup: With Beautiful Soup, parsing HTML and XML documents becomes a breeze. It provides convenient methods for navigating through document structures, extracting data, and handling malformed markup.

- Scrapy: If you’re looking for a powerful web scraping framework, Scrapy should be on your radar. It offers advanced features like automatic throttling, concurrent requests handling, and built-in support for XPath selectors.

- Selenium: Selenium enables browser automation by controlling web browsers programmatically. This library is useful when dealing with dynamically generated content or interacting with JavaScript-heavy websites.

- PyQuery: PyQuery is inspired by jQuery’s syntax and allows you to perform CSS-style queries on parsed HTML documents effortlessly.

- urllib: Python's built-in urllib package provides modules for working with URLs: fetching data over protocols such as HTTP and FTP (urllib.request), parsing and encoding/decoding URLs (urllib.parse), and reading robots.txt files (urllib.robotparser).

- lxml: lxml is a fast, feature-rich library for processing XML and HTML, with built-in support for XPath queries.

Remember that each library serves a specific purpose in the web crawling process; choose them wisely based on your project requirements.
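As a small illustration of the standard-library urllib package, the sketch below uses urllib.parse to turn the relative links a crawler finds on a page into absolute URLs, drop #fragments so the same page is not queued twice, and keep only http(s) links. The helper name normalize_link and the example URLs are hypothetical.

```python
from urllib.parse import urldefrag, urljoin, urlparse


def normalize_link(page_url, href):
    """Resolve href against page_url; return None for non-HTTP(S) links."""
    absolute = urljoin(page_url, href)          # make relative links absolute
    absolute, _fragment = urldefrag(absolute)   # strip "#section" anchors
    if urlparse(absolute).scheme not in ("http", "https"):
        return None  # skip mailto:, javascript:, ftp:, etc.
    return absolute


page = "https://example.com/docs/intro.html"
for href in ["guide.html#setup", "/about", "https://other.org/x", "mailto:hi@example.com"]:
    print(href, "->", normalize_link(page, href))
```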

Understanding HTML Parsing

HTML parsing is an essential step in building a web crawler using Python. It allows you to extract relevant information from the HTML code of a webpage. Here are some key points to understand about HTML parsing:

- What is HTML Parsing?

  - HTML parsing refers to the process of analyzing an HTML document and extracting meaningful data or elements from it.

  - Python provides several libraries, such as BeautifulSoup and lxml, that make it easy to parse and navigate through HTML documents.

- Why Do We Need HTML Parsing?

  - Webpages are structured using HTML tags, which enclose different types of content like headings, paragraphs, links, images, etc.

  - By parsing the HTML code of a webpage, we can locate specific elements or extract desired data for our web crawler's purposes.

- Parsing with BeautifulSoup:

  - BeautifulSoup is a popular Python library that simplifies the task of parsing and navigating through an HTML document.

    ```python
    # Installation (run in a terminal):
    #   pip install beautifulsoup4

    # Importing:
    from bs4 import BeautifulSoup
    ```

- Navigating Through Elements:

  - Once a document is parsed with BeautifulSoup, we can use methods such as .find() and .find_all() to locate specific elements based on their tag names or attributes.

- Extracting Data:

  - After locating one or more elements, we can access their content with what BeautifulSoup provides, such as the .text attribute for an element's text and the .get() method for an attribute value.

- Handling Nested Elements:

  - When dealing with nested elements (e.g., a div inside another div), we need to traverse the hierarchy accordingly, for example by calling .find() on a parent element so that the search covers only that element's descendants.

- Dealing With Errors:

  - Webpages sometimes have malformed or inconsistent markup, and an element you expect may be missing (in which case .find() returns None), so it is important to check for missing elements and handle exceptions.

Understanding how to parse HTML using libraries like BeautifulSoup is a fundamental skill for building effective web crawlers in Python. By extracting relevant information from the HTML code, you can collect data for further analysis or processing.
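The sketch below puts these steps together on a small, made-up HTML snippet (the markup, class names, and URLs are hypothetical): it uses .find() and .find_all() to locate elements, .text, .get_text(), and .get() to read text and attributes, searches inside each parent element to handle nesting, and checks for None when an element is missing. It assumes beautifulsoup4 is installed and uses Python's built-in "html.parser" backend.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded page.
HTML = """
<html><head><title>Example Shop</title></head>
<body>
  <div class="product">
    <h2>Blue Mug</h2>
    <a href="/mugs/blue">Details</a>
  </div>
  <div class="product">
    <h2>Red Mug</h2>
    <a href="/mugs/red">Details</a>
  </div>
</body></html>
"""

soup = BeautifulSoup(HTML, "html.parser")

# .find() returns the first matching element (or None); .text is its text.
print(soup.find("title").text)

# .find_all() returns every match; searching inside each product <div>
# handles the nested structure (div > h2, div > a).
for product in soup.find_all("div", class_="product"):
    name = product.find("h2").get_text(strip=True)
    link = product.find("a").get("href")  # .get() reads an attribute value
    print(name, link)

# Missing elements come back as None, so check before using them.
price = soup.find("span", class_="price")
print(price.get_text() if price is not None else "no price found")
```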

Implementing Crawling Logic

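A minimal Scrapy spider that starts from a single Wikipedia article looks like this:

```python
import scrapy


class spider1(scrapy.Spider):
    name = 'Wikipedia'  # identifier Scrapy uses for this spider
    # Seed URL(s); Scrapy requests each one and passes the response to parse()
    start_urls = ['https://en.wikipedia.org/wiki/Battery_(electricity)']

    def parse(self, response):
        # Example extraction: yield the page title as a scraped item
        yield {'title': response.css('title::text').get()}
```

To create a web crawler in Python, you need to implement the crawling logic. This involves defining the steps that your crawler will take to navigate through websites and collect data. Here's how you can do it: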

- Choose a starting point: Determine the initial URL from where your crawler will begin its journey. You can start with a single website or provide a list of URLs.

- Send HTTP requests: Use the requests library in Python to send HTTP GET requests to each URL you want to crawl. This allows you to retrieve the HTML content of web pages.

- Parse HTML responses: Use an HTML parsing library such as BeautifulSoup or lxml to extract relevant information from the HTML response received for each request. Parse elements such as links, titles, images, and other data according to your requirements.

- Store crawled data: Decide how you want to store the crawled data for further processing or analysis. You could use databases like MySQL or MongoDB, CSV files, or any other suitable storage format.

- Manage visited URLs: Keep track of visited URLs using a set or database table (if needed) to avoid revisiting them during subsequent crawls.

- Extract new URLs: Extract all unique URLs from within each page’s content and add them to a queue for future crawling tasks.

- Limit crawling scope (optional): To prevent infinite crawling loops and control resource usage, consider implementing mechanisms such as maximum depth limits, domain restriction filters, or inclusion/exclusion rules based on URL patterns.

- Implement politeness policy (optional): To be respectful towards website owners and minimize potential impact on their servers’ performance, introduce delays between successive requests by adding sleep timers before sending out new ones.
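The steps above can be combined into a small breadth-first crawler. This is a minimal sketch rather than a production implementation, and it assumes the requests and beautifulsoup4 packages are installed: a deque serves as the URL queue, a set tracks visited URLs, crawling is restricted to the seed URL's domain and capped by max_pages, and time.sleep() adds a politeness delay. The function names crawl and fetch_html and the default limits are illustrative choices; the fetch parameter exists so the crawling logic can be tried without network access.

```python
import time
from collections import deque
from urllib.parse import urldefrag, urljoin, urlparse

import requests
from bs4 import BeautifulSoup


def fetch_html(url):
    """Download a page and return its HTML, or None on any request error."""
    try:
        response = requests.get(url, timeout=10)  # always set a timeout
        response.raise_for_status()  # treat 4xx/5xx responses as errors
        return response.text
    except requests.RequestException as exc:
        print(f"Skipping {url}: {exc}")
        return None


def crawl(seed_url, max_pages=20, delay=1.0, fetch=fetch_html):
    """Breadth-first crawl within seed_url's domain; returns {url: title}."""
    domain = urlparse(seed_url).netloc
    queue = deque([seed_url])  # URLs waiting to be crawled (FIFO = breadth-first)
    visited = set()            # URLs already requested, to avoid repeats
    results = {}

    while queue and len(visited) < max_pages:  # cap the number of requests
        url = queue.popleft()
        if url in visited:
            continue
        visited.add(url)

        html = fetch(url)
        time.sleep(delay)  # politeness delay after every request
        if html is None:
            continue

        soup = BeautifulSoup(html, "html.parser")
        title = soup.find("title")
        results[url] = title.get_text(strip=True) if title is not None else ""

        # Queue links that stay on the seed's domain and haven't been visited.
        for anchor in soup.find_all("a", href=True):
            link, _fragment = urldefrag(urljoin(url, anchor["href"]))
            if urlparse(link).netloc == domain and link not in visited:
                queue.append(link)

    return results
```

For example, crawl("https://example.com/", max_pages=5) would fetch up to five pages from example.com and return a dictionary mapping each URL to its page title. Before pointing it at a real site, check that site's robots.txt and terms of service.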

By following these steps while coding your web crawler in Python, you’ll have an effective system for crawling websites and gathering the desired data. Remember to handle potential exceptions, timeouts, and other error scenarios gracefully to ensure robustness in your implementation.
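One way to handle timeouts and transient errors gracefully is to retry a failed request a few times with an increasing delay. The sketch below assumes the requests library; the function name fetch_with_retries, the User-Agent string, and the retry and backoff defaults are illustrative assumptions, not values recommended by any particular site. It gives up immediately on most 4xx client errors (such as 404 Not Found), which retrying would not fix, but retries 429 Too Many Requests.

```python
import time

import requests

# Hypothetical User-Agent identifying the crawler to site owners.
HEADERS = {"User-Agent": "MyCrawler/0.1 (+https://example.com/crawler-info)"}


def fetch_with_retries(url, retries=3, backoff=1.0, timeout=10):
    """Return the page HTML, or None if every attempt fails."""
    for attempt in range(retries):
        try:
            response = requests.get(url, headers=HEADERS, timeout=timeout)
            status = response.status_code
            if 400 <= status < 500 and status != 429:
                return None  # client error (e.g. 404): retrying won't help
            response.raise_for_status()  # 429 and 5xx raise HTTPError
            return response.text
        except requests.RequestException as exc:  # timeouts, connection errors, HTTPError
            print(f"Attempt {attempt + 1}/{retries} for {url} failed: {exc}")
            if attempt < retries - 1:
                # Exponential backoff: backoff, 2*backoff, 4*backoff, ...
                time.sleep(backoff * 2 ** attempt)
    return None
```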

Note: It’s important to be mindful of web scraping ethics and legal considerations while implementing a web crawler. Make sure you comply with website terms of service, respect robots.txt directives, avoid excessive requests that may cause disruptions or violate policies, and focus on collecting publicly available information responsibly.

Happy crawling!

Handling Data Extraction

When building a web crawler in Python, data extraction is a crucial step. It involves extracting relevant information from the crawled web pages. In this section, we will explore various techniques and libraries for handling data extraction efficiently.

Here are some methods you can use to extract data from web pages:

- Regular Expressions (Regex): Regex provides a powerful way to search and extract patterns from strings. You can define specific patterns using regex to match and capture the desired data within HTML or text content.

- BeautifulSoup: BeautifulSoup is a popular Python library that enables easy parsing of HTML and XML documents. It offers intuitive methods to navigate through the document structure and extract relevant data by targeting specific tags, classes, or attributes.

- XPath: XPath allows you to traverse XML or HTML documents using path expressions similar to file paths in operating systems. The lxml library in Python provides robust support for XPath queries, making it an efficient choice for complex data extraction tasks.

- Scrapy: Scrapy is a powerful framework specifically designed for web scraping purposes. It simplifies the process of extracting structured data from websites by offering built-in features like automatic request handling, spider management, and item pipelines.

- APIs: Some websites provide APIs (Application Programming Interfaces) that allow developers to access their data programmatically rather than scraping it directly from their website’s HTML structure.

- Data Cleaning Libraries: After extracting raw data, cleaning may be required before further analysis or storage into databases. Libraries such as Pandas offer functions for filtering out noise or irrelevant elements while processing large datasets efficiently.
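As a small example of the regular-expression approach, the sketch below pulls dollar prices out of a piece of text, such as text already extracted from a page with BeautifulSoup. The pattern and sample text are hypothetical; regular expressions work best for small, predictable patterns like this, while whole HTML documents are better handled by a parser such as BeautifulSoup or lxml.

```python
import re

# Hypothetical text, e.g. the result of soup.get_text() on a product page.
TEXT = "Blue Mug: $12.99 (was $15.00). Red Mug: $9.50. Free shipping over $50."

# A dollar sign, one or more digits, and an optional two-digit cents part.
PRICE_PATTERN = re.compile(r"\$(\d+(?:\.\d{2})?)")

# findall() returns the captured group for each match; convert to float.
prices = [float(match) for match in PRICE_PATTERN.findall(TEXT)]
print(prices)  # → [12.99, 15.0, 9.5, 50.0]
```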

Remember these best practices when handling data extraction:

- Inspect page source code: Before writing your code, examine the target webpage’s source code carefully; this helps identify appropriate tags or patterns necessary for accurate extraction.

- Handle exceptions gracefully: Ensure your code has proper error-handling mechanisms in case of unexpected data or network issues.

- Respect website policies: Always review the website’s terms of service and robots.txt file to avoid violating any rules or scraping limitations.

By implementing these techniques and following best practices, you can effectively extract valuable data from web pages using your Python web crawler.

Conclusion

In conclusion, building a web crawler in Python can be an exciting and rewarding endeavor. By following the steps outlined in this article, you now have the knowledge and tools to create your very own web crawling application.

Throughout this guide, we covered various aspects of web scraping using Python libraries such as BeautifulSoup and requests. We learned how to send HTTP requests, extract data from HTML pages, and navigate through different elements of a website.

Remember that when developing a web crawler, it is essential to respect website policies by setting appropriate crawl delays and user-agent headers. Additionally, ensure that your crawler adheres to ethical guidelines and legal requirements.

By harnessing the power of Python’s extensive libraries for web scraping, you are equipped with the ability to gather valuable data from websites efficiently. With practice and experimentation, you can further enhance your skills in creating more advanced crawlers tailored to meet specific needs or requirements.

So go ahead and embark on your journey into the world of web crawling with Python—the possibilities are endless! Happy coding!
