MLOps is a discipline that links machine learning and IT operations. It focuses on streamlining the machine learning lifecycle, from development to deployment and maintenance. MLOps orchestrates...

Choosing the right property management system (PMS) for your business requires careful consideration of various factors. It’s not just about selecting software with a wide range...

Every company wants a fast, flexible, and effective application, which raises the question of whether such flawless software exists. A sizable developer community maintains Node.js, an...

When it comes to selecting a database management system, you have an abundance of choices, and one of the best is PostgreSQL. PostgreSQL...

Understanding the AI engineer hiring process. Comprehending AI staff augmentation services requires knowing their unique characteristics. Let's explore some key features that differentiate them from traditional staffing...

Searching for the ideal NDA template for your software development project? Look no further! This blog will help you make an informed decision. We'll cover the...

Introducing Svelte 3, the innovative component framework for building web applications! Much like React or Vue.js, Svelte is a tool for developers to construct robust web...

As a programming language, Python has gained significant popularity over the years. Python’s extensive standard library and ease of learning make it one of the most...

This blog explains the many ways artificial intelligence is helping us.

Ukraine is among the countries on track to become a top software development destination in Europe. The IT industry generally grows due to...

Nowadays, there are many source code hosting services to choose from, each with its own pros and cons. The challenge, however, is to pick...

Worried about the costs for your data transformations and processes using AWS Glue? Get the scoop on the pricing of AWS Glue. Let this article guide...

Struggling with ChatGPT? Don't worry! We have your back. This article will guide you through building an app with ChatGPT (the OpenAI API),...

Hire ERP developers with expertise in various ERP systems. An ERP system is business software that integrates all facets of a business, which are operational,...

A rent-to-own dedicated server is a flexible and cost-effective solution for companies that require a dedicated server but want to avoid the upfront capital expense of purchasing...

Looking for the best way to keep your software development process current and efficient? In the ever-changing software industry, continuous integration tools are imperative for...

Since the COVID-19 pandemic, outsourcing software development projects has gained considerable momentum. And why not, since outsourcing lets you receive the desired outcomes...

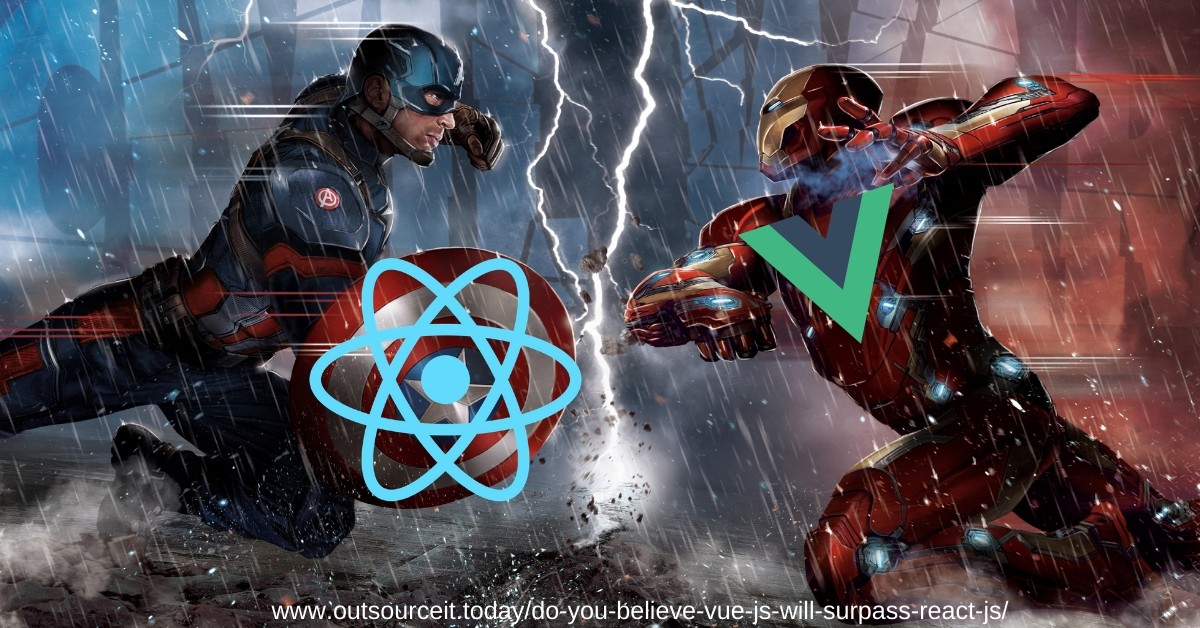

It’s difficult to predict the future of web development frameworks, but it’s unlikely that Vue will completely overtake React in popularity. React has been widely adopted...

Note: This is one of the articles I prepared without pictures, because, in fact, the only correct picture on the topic of development is a paid invoice...

Are you searching for a budget-friendly way to boost your tech team? US tech companies are now turning to Latin American countries to hire remote, home-based...

Organizations of all sizes and industries now have access to ever-increasing amounts of data, far too vast for any human to comprehend. All this information is...

Hello and welcome to my PyTorch development and consulting services blog! If you’re looking for help with PyTorch development or consulting, then you’ve come to the...

Welcome to my blog, where I discuss the latest developments in chatbot technology and its application to coding! Whether you’re a beginner or an experienced developer,...

Corporate finance is an important part of any business, and the software used can make a big difference in how successful a company is. There are...

Which framework performs better, Flask or Django? Both Flask and Django are powerful web frameworks that are widely used and have their own unique advantages...

“Green software” refers to software programs that are designed and developed with environmental sustainability in mind. These programs are intended to minimize their impact on the...

Hey there! I wanted to share a little story with you about my experience with Python. I’ll admit right off the bat that I’m not a...

Thinking about building your project with Java? First, find out what a Java developer's duties, roles, and responsibilities are according to the job description. This article...

No-code backend software, or Backend as a Service (BaaS), provides an instant platform for developers to store and manage data, creating an efficient connection between frontend applications...

Cryptocurrency has various advantages over conventional digital payment systems. Crypto transactions usually have low processing fees, and crypto makes it possible to avoid chargebacks. It has...

The International Electrotechnical Commission (IEC) does not have a set of standards specifically created for the security of the Internet of Things (IoT). However, it has...

In this article, we'll explore Python project ideas from beginner to advanced levels so that you can easily...

Welcome to our blog about PHP vulnerabilities! Here, you will learn all about injection attacks, exploits, scanners, flaws, and security issues related to the powerful...

Electron works more like the Node.js runtime. If you’re a web developer, you’ve probably heard of Node.js. It’s a popular JavaScript runtime that allows you to...

Tech progress continues to erase the borders between the virtual and real worlds, and blockchain is one of the core technologies behind this process. Paying in crypto...

When it comes to developing a new solution, creating it as a minimum viable product is a sound idea. It allows startups to spend less time...

Blockchain is a relatively niche specialty among developers, so you need to know where to go to find competent and experienced blockchain engineers. The average cost to...

A Product Roadmap is a valuable artifact that outlines a company’s direction, product vision, priorities, and product development progress. A roadmap is a blueprint for how...

Scope creep is a very real phenomenon in the world of software development, and it can have disastrous consequences if left unchecked. In this blog post,...

The Minimum Marketable Product, or MMP, is the bare minimum product or service that you need to create in order to test the market and validate...

Blockchain technology continues to evolve and the potential of smart contracts is increasing. Smart contracts are self-executing and can enforce the terms of an agreement without...

A model is a simplified representation of a real-world system. In machine learning, we create models to make predictions about data. But how do these models...

Without a doubt, JavaScript's popularity has reached a new high following releases such as the all-new Vue.js and an improved version of Node.js, among much more. The...

Outsourcing the development of your Python application can help you save time and money. There are many benefits associated with outsourcing, including: Ease of access to...

End-to-end software product development is the process of taking a software product from idea inception to final delivery. It includes everything from idea generation and market...

Git HEAD is a reference to the most recent commit on the currently checked-out branch. Git also accepts @ as a shorthand for HEAD. A Git commit history usually...

If you’re looking for a detailed explanation of total cost of ownership in software, you’ve come to the right place. In this blog post, we’ll explore...

The COVID-19 pandemic has affected how things get done, and it’s not business as usual anymore. However, it is increasingly necessary for companies to survive the...

Loyal customers are the lifeblood of any successful business, which is why, for a business to grow and earn recognition, it's important to carefully...

The Book of Secret Knowledge ⭐ 78.5k A collection of inspiring lists, manuals, cheat sheets, blogs, hacks, one-liners, cli/web tools, and more. Coding Interview University ⭐...