
Tangled Up in Tools

What’s Wrong with Libraries, and What to Do about It

by Mike Taylor

Surely “Just learn these 57 classes” is not the re-use we were promised?

Whatever Happened?

A few weeks ago on my blog, The Reinvigorated Programmer, I wrote an entry entitled “Whatever happened to programming?” that resonated deeply with a lot of people, judging by the many comments. Rather than respond individually to the initial batch of comments, I wrote a followup article, which in turn generated a lot more comments—on Hacker News, Reddit and Slashdot, as well as on the blog itself. Those comments have thrown up many more issues, and it’s taking time for me to digest them all. I think I am groping towards some kind of conclusion, though. In this article, I’ll briefly review the substance of those two entries, and speculate on possible ways forward.

The original article was born out of frustration at the way modern programming work seems to be going—the trend away from actually making things and towards just duct-taping together things that other people have already made. I’ve been feeling this in a vague way for some time, but the feeling was crystalized by Don Knuth’s observations in a long interview for Peter Seibel’s book Coders at Work. Knuth said:

The way a lot of programming goes today isn’t any fun because it’s just plugging in magic incantations—combine somebody else’s software and start it up. It doesn’t have much creativity. I’m worried that it’s becoming too boring because you don’t have a chance to do anything much new. Your kick comes out of seeing fun results coming out of the machine, but not the kind of kick that I always got by creating something new. The kick now is after you’ve done your boring work then all of a sudden you get a great image. But the work didn’t used to be boring. (page 594)

It’s evident that a lot of people feel the same way. It’s not that we want to have to write our own sort and search routines every time we need to do that stuff—everyone recognizes that that would be a waste of time better spent on solving higher-level problems. But when I’m working on a modern web application, I want to bring the same kind of creativity to bear that I used to need back in the day when we wrote our own quicksorts, schedulers, little-language parsers, and so on. I don’t want my job to be just plugging together components that other people have already written (especially when the plugging process is as lumpen and error-prone as it often is). I want to make something.

Scientists vs. Engineers

Responses to the “Whatever happened?” articles seemed to be split roughly 50–50 between scientists and engineers. Scientists said, “Yes, yes, I know exactly what you mean!”; engineers said “This is the re-use we’ve all been trying to achieve for the last forty years.” Part of me knows that the engineers are right, or at least partly right; but part of me feels that surely this can’t be the shiny digital future we were all looking forward to. Does re-use really have to be so, well, icky? Surely “Just learn these 57 classes” is not the re-use we were promised?

But the truth is that, like most of us, I wear both scientist and engineer hats at different times. What I want with one hat on might not be what I want with the other hat on. There are plenty of things that both hats agree on—the desirability of simplicity, clarity and generality, for example, the three Magic Words on the cover of Kernighan and Pike’s book The Practice of Programming. But the hats have different ideas on how to get there. The science hat cares deeply about understanding. No, it’s more than that: it cares about deeply understanding. The engineering hat cares about getting stuff done. What I am still trying to figure out is this: is it quicker just to Get Stuff Done without bothering to understand, or is time invested in understanding worthwhile even when measured in a purely utilitarian way? I honestly don’t know.

That Was Then, This Is Now

It didn’t used to be this way. I’ve been programming computers since 1980: Commodore BASIC, then C, then Perl, and most recently Ruby. Along the way I’ve used a lot of other languages, but those have been the four that, at some time or another, have been my favorite—my language of choice. For a few years back in the mists of time, all my thoughts had line numbers. In the 1990s, any time I wanted to write a program, my fingers would automatically type int main(int argc, char *argv[]) as soon as my hindbrain detected a fresh emacs buffer. I spent a decade of my life believing that the distinction between scalar and list context made sense, and that wantarray() was the kind of thing that a rational language might include. I’m better now, but it sometimes seems odd to me that physical objects in meatspace don’t know how to respond to an each message with a closure attached.

In the last 30 years, I’ve seen a lot of changes in how programming is done, but perhaps the key one is the rise of engineering. On a Commodore 64 you had to be a scientist to get anything done. You had to know the machine deeply, you had to grok its inmost core, to become one with its operational parameters, to resonate with the subtle frequencies of its most profound depths—or, at least, know what location to POKE to turn the border purple.

Much the same was true when programming in C on Unix: you still needed to know the machine, even if now it was a slightly more abstract machine. But there was an important change—in retrospect, even more important than the change from unstructured spaghetti code to block-structured, or from interpreted to compiled: there was a library. Yes, a library—just one. (Well, roughly one; I am simplifying a bit, because there was also curses, termcap and a few other little ones.) The library provided simple ways to do complex things like sorting and formatted output, as well as system-level operations like setting up pipes and forking processes. It need hardly be said that everyone agreed the library was A Good Thing—a huge time-saver. So we all learned the library: good C programmers knew the whole thing, including stuff like the difference in the order of arguments between write() and fwrite(), the signature of a qsort() comparison-function, and when to use strcpy() vs. memcpy(). It gave us a whole new palette to paint with.

And then, wow, Perl. Perl doesn’t offer a library. Perl has what is probably the biggest catalogue of libraries of any language in the world: CPAN (the Comprehensive Perl Archive Network). At the time of writing, CPAN offers 19941 distributions for download, of which 500 pertain to XML alone. It’s overwhelming. In the last dozen years, at least three biographies have been written with the title The Last Man Who Knew Everything (about Athanasius Kircher, Thomas Young, and Joseph Leidy). It’s exhilarating to think of a time when it was possible for a single individual to master all the accumulated knowledge of mankind; now it’s not possible to master all the open-source libraries for Perl. There’s just so darned much of everything.

Library Blindness

So what is a boy to do? Choose libraries carefully, learn only a few, and hope that you picked the right ones. For historical reasons, I do my XML handling in Perl using the clumsily named XML::LibXML library, and I have no real idea whether it’s the best one for the job. It’s the easiest one for me to use, because all I have to do is find some code where I used it before, cut and paste the instantiation/invocation, and tweak to fit the current case. But while my engineer hat is happy enough with that, my scientist hat is deeply unhappy that I don’t understand what I am doing. Not really.

Of course the XML-in-Perl problem would not be that hard to solve—I’d just need to take some time to surf around a bit, see which XML libraries other people are using and what they say about them, figure out how “alive” the code is (are there recent releases, and how frequent are they?), download and install them, try them out, and see which I like best. If XML were the only area where Library Blindness were an issue, I would probably just shut up whining and do it. But the same applies in a hundred other areas, and I am too busy chopping down trees to stop and sharpen my axe.

Am I saying that there shouldn’t be any libraries for Perl? Heck, no—I don’t want to do my own XML parsing! What then? That there should be One True Library, like there was for C in the 1980s, and someone trustworthy should decide what’s in and what’s out? No, that could never work. I am, in truth, not sure what I am saying: I don’t know the solution. I can’t even propose one, beyond the obvious observation that better reputation management mechanisms would make it less daunting to choose one library from among many that compete in the same space. We need libraries, and lots of them, for the simple reason that we are expected to do much more now than we were back when we had Commodore 64s (or, heaven help us, VIC-20s). The small, self-contained programs that we wrote then didn’t need libraries because they didn’t make REST calls to web services, or map objects onto relational databases, or transform XML documents. (Perhaps we could have used graphics libraries; but since the hardware didn’t really support arbitrary plotting, most graphics was done with user-defined characters and, if you were lucky, sprites.)

So libraries are necessary. Are they a necessary evil? Only if you think that the evilness is a necessity. I don’t think it is; but experimental evidence is against me.

The Library Lie

A commenter called Silversmith in the Reddit discussion of my original article had an idealized view of libraries: “You have problem X, consisting of sub-problems X1, X2, X3. There are readily available solutions for X1 and X3. If you don’t feel like being a bricklayer, code X. I choose to code X2, plug in solutions X1 and X3, and spend the rest of the day investigating and possibly solving problem Y.” That is what I call the library lie, and I think that Silversmith has swallowed it. The unspoken assumption is that “plug in solutions X1 and X3” is trivial—that it takes little effort and close to no time, and that the result is a nice, clean, integrated X. But we know from experience that this isn’t true. The XML that X1 produces is supposedly in the same format as X3 requires, but it’s mysteriously rejected when you feed it to X3 (and there is of course no useful error message—just “XML error”). You provide two hook functions for X3 to call back, but one never gets called and the other seems to be called twice… sometimes, under conditions that aren’t clear. And so it goes, and so you find yourself writing not just X2 but also wrapper layers X1’ and X3’ that are supposed to make X1 and X3 look like you want them to. And even when you’re done you’re not really clear in your mind why the aggregate works for the cases you’ve tested; and you have no confidence that something won’t go mysteriously wrong when you start using it in other cases.

I’m not saying that building X1’ and X3’ and dealing with all the pain isn’t still quicker overall than writing your own X1 and X3. But I am saying that we need at least to be honest with ourselves about how long “just plug in solutions X1 and X3” is going to take; and we need to recognize that the end result might not be as tractable or reliable as if we’d built the whole solution.
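To make the glue concrete, here is a minimal Ruby sketch of a wrapper layer of the X1’ kind. Every name in it (X1, X3, X1Adapter, and the record fields) is hypothetical, invented purely for illustration; real mismatches are rarely this tidy.

```ruby
# X1 and X3 stand in for two third-party libraries; both are invented here.
# X1 emits records with string keys and a "full_name" field.
class X1
  def fetch
    [{ "full_name" => "Ada Lovelace", "born" => "1815" }]
  end
end

# X3 expects symbol keys, a :name field, and an integer :year.
class X3
  def render(record)
    raise ArgumentError, "bad record" unless record[:name] && record[:year].is_a?(Integer)
    "#{record[:name]} (#{record[:year]})"
  end
end

# The wrapper layer (X1' in the text): glue code we never planned to write.
class X1Adapter
  def initialize(source)
    @source = source
  end

  # Translate X1's output into the shape that X3 accepts.
  def fetch
    @source.fetch.map do |raw|
      { name: raw["full_name"], year: Integer(raw["born"]) }
    end
  end
end

# X2, the part we actually wanted to write, is now one line.
lines = X1Adapter.new(X1.new).fetch.map { |record| X3.new.render(record) }
```

Even in this toy case, the adapter is longer than either of the stub components it joins, which is the cost that “just plug in solutions X1 and X3” leaves out.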

Libraries are a win. But they are not as big a win as they want you to think, and sometimes they are the kind of win that makes you wish you’d lost.

I’ll See Your Library Blindness and Raise You Framework Fever

If there is one thing more frightening than mapping your way through a maze of twisty little libraries, all different, it’s getting mired in the framework swamp. “Framework” is a word that’s leaped to prominence in the last few years—you rarely heard it before the turn of the millennium, outside Java circles at least, but now frameworks are the hot game to be playing. Sometimes the word seems to be merely a fashionable synonym for library, but the framework proper is a bigger and hairier beast.

A framework can be defined as a set of libraries that say “Don’t call us, we’ll call you.” When you invoke a traditional library, you are still in control: you make the library calls that you want to make, and deal with the consequences. A framework inverts the flow of control: you hand over to it, and wait for it to invoke the various callback functions that you provide. You put your program’s life in its hands. That has consequences: one of the most important ones is that, while your program can use as many libraries as it likes, it can only use—or, rather, be used by—one framework. Frameworks are jealous. They don’t share.
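The difference in control flow can be sketched in a few lines of Ruby. Everything here is hypothetical: TinyLibrary, TinyFramework, and the hook name on_record are invented for illustration and are not taken from any real framework.

```ruby
# Library style: the caller stays in control and decides when to call.
module TinyLibrary
  def self.parse_record(line)
    name, age = line.split(",")
    { name: name.strip, age: age.to_i }
  end
end

# Framework style: the framework owns the main loop and calls back
# into code that the application registers in advance.
class TinyFramework
  def initialize
    @hooks = []
  end

  # The application hands over a block; it does not choose when it runs.
  def on_record(&block)
    @hooks << block
  end

  # The framework decides when, and how often, each hook fires.
  def run(lines)
    lines.each do |line|
      record = TinyLibrary.parse_record(line)
      @hooks.each { |hook| hook.call(record) }
    end
  end
end

# With the library, we call it.
direct = TinyLibrary.parse_record("Ada, 36")

# With the framework, it calls us.
seen = []
framework = TinyFramework.new
framework.on_record { |record| seen << record[:name] }
framework.run(["Ada, 36", "Grace, 45"])
```

In the second half, nothing in the application code says when the block runs. That decision lives inside run, which is the part you don’t get to read until something goes wrong.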

Is it worth ceding control to a framework? Well, sometimes. My experience has been that so long as you do things the framework’s way, and don’t need to go beyond what the author imagined, you’ll do fine—things will work quickly and simply and the sun will shine and birds will sing until suddenly—uh-oh!—you need to do something just a tiny bit differently and wham! everything falls apart. Subtle dependencies that were hidden from you when it was all going right suddenly leap out of the bushes and yell at you. The monsters that were chained in the basement all get loose at once, and suddenly you add this line to your callback and it makes that seemingly unrelated thing go wrong. And so you patch for that and something completely separate goes wrong at the other end of your application, and the log-file doesn’t tell you anything useful. So you try to look up the problem area in the documentation, but when you find the entry for the function newRecordAddedHook, it just says “This hook is called when a new record is added.”

There’s a scary example of framework failure right in the Rails book, Agile Web Development with Rails (Third Edition). The tutorial has been going swimmingly for 187 pages, teaching you how to do things like add has_many :line_items to the Product model class: minimal code, all self-explanatory. Then suddenly, out of nowhere, the Internationalization chapter tells you to simply add a form to the store layout:

    <% form_tag '', :method => 'GET', :class => 'locale' do %>
      <%= select_tag 'locale', options_for_select(LANGUAGES, I18n.locale),
        :onchange => 'this.form.submit()' %>
      <%= submit_tag 'submit' %>
      <%= javascript_tag "$$('.locale input').each(Element.hide)" %>
    <% end %>

I mean to say, what?

The form tag, select tag and submit tag make sense in light of what has gone before, but that hunk of JavaScript appears in the text fully formed, direct from the mind of Zeus. It’s great that it appears right there in the tutorial, but there is no way I would ever have arrived at that for myself.

When I run into this kind of thing, I feel like I am playing 1980s Adventure games all over again. You remember: OPEN DOOR / The door is locked / UNLOCK DOOR / Unlock the door with what? / UNLOCK DOOR WITH KEY / Do you mean the iron key or the brass key? / UNLOCK DOOR WITH BRASS KEY / The brass key does not fit. It’s guesswork. Worse than that, it’s guessing uninteresting details like vocabulary rather than guessing about the actual problem. When I played Lurking Horror, I had terrible trouble with a puzzle near the very end, when it was obvious that something was in a pool of water and I had to get it out. I couldn’t ENTER POOL or SWIM IN POOL, and attempts to PUT HAND IN POOL or FEEL POOL or FEEL IN POOL were all fruitless. In the end I hit on the answer, REACH INTO POOL. This sort of guess-what-the-author-had-in-mind game is dispiriting enough when you’re trying to defeat a gothic horror from outside of time and save the world from the evil dominion of cultic beasts; but it’s just plain dumb when all you’re trying to do is display a dropdown.

You Are Now the Underclass

My blood ran cold yesterday when I read these comments in an interview with the Gang Of Four on the subject of Design Patterns 15 Years Later:

Richard Helm: I think there has been an evolution in level of sophistication. Reusable software has migrated to the underlying system/language as toolkits or frameworks—and mostly should be left to the experts.

Ralph Johnson: Most programmers are not hired to write reusable software […] Perhaps it would be better now to aim the book at people using patterns chosen by others rather than aim it at people trying to figure out which pattern to use.

There it is, folks, in black and white: according to the experts, all us working Joes should just be using frameworks… which will be written by: the experts.

You know what? I don’t trust them to get it right. I mean, why would they start now?

The Problem Is Not Libraries. The Problem Is Bad Libraries

I’ve painted a black picture. I recognize the need these days to write programs that do much more than they did back in the 1980s; I’ve admitted that we can’t do that without libraries; I may not have come right out and said that frameworks are necessary, too, but deep in the black recesses of my heart I know it’s true (otherwise why would I be bothering to read the Rails book?). It’s funny to remember my lecturers, back when I was doing my maths-and-CS degree in the late 1980s, talking about the “software crisis” – they really had no idea how bad it was going to get. Dijkstra’s words from The Humble Programmer seem prescient: “as long as there were no machines, programming was no problem at all […] now we have gigantic computers, programming has become an equally gigantic problem.” It’s hard to believe that was written in 1972.

So I seem to be whining quite a lot. Do I have any constructive suggestions?

Why, yes! Yes, I do. I have a two-prong manifesto.

Prong 1: Simpler, Clearer, Shorter Documentation

The simplest thing that we can do right now is to rethink how we document libraries. Automatic documentation tools like Javadoc and RDoc are good for producing thick stacks of paper, but not so hot at actually telling library users the things they need to know. They are the source of most of the world’s “newRecordAddedHook is called when a new record is added” documentation. They can be useful, but they are no substitute for actually writing about the library: single-page summaries that answer the three key questions: what the library does, why you should use it, and how to do so. For bonus points, the one-page summary should avoid using the words “enterprise” (unless it’s a library of Star Trek ships) and “innovative.”

Here’s an example of the kind of thing we need to get away from: I quote verbatim from the first paragraph of content on the front page of the primary web site about JavaServer Faces:

Developed through the Java Community Process under JSR – 314, JavaServer Faces technology establishes the standard for building server-side user interfaces. With the contributions of the expert group, the JavaServer Faces APIs are being designed so that they can be leveraged by tools that will make web application development even easier. Several respected tools vendors were members of the JSR-314 expert group, which developed the JavaServer Faces 1.0 specification. These vendors are committed to supporting the JavaServer Faces technology in their tools, thus promoting the adoption of the JavaServer Faces technology standard.

This is a model of obfuscation; a thing of beauty, in its own way. In nearly a hundred words, it says almost nothing. It contrives to use the name “JavaServer Faces” no fewer than five times, without once giving more than the vaguest hint of what it actually is—something to do with user interfaces, apparently, though whether on the Web or the desktop or elsewhere I couldn’t say. It does tell you a whole bunch of stuff that you might just become interested in after a year or two of actually working with a technology, but which no rational being approaching it for the first time could possibly care about. That several respected tools vendors were members of the JSR-314 expert group was not top of my Things To Find Out About JSF list.

(Let me say that my point here is not to pick on JSF particularly. It is merely one of hundreds of equally dense examples I could have picked—and that, really, is precisely my point.)

I suggest that any library whose key features can’t be summarized in one page of A4 (or US Letter, if you insist) is too complex and needs to be redesigned. By “too complex” here, I don’t mean merely that it does too much, but that the stuff it does do is not focused enough. In fact you ought to be able to briefly summarize what a library does in a single sentence. And if the sentence begins “It’s a framework for constructing abstractions that can be used to derive models that …”, then you lose.

Prong 2: Minimize the Radius of Comprehension

“Radius of comprehension” is a new term that I am introducing here, because it describes an important concept that I don’t think there is a name for. It is a property of a codebase defined as follows: if you are looking at a given fragment of code, how far away from that bit of the code do you need to have in your mind at that time in order to understand the fragment at hand? It is a sort of a measurement of how good encapsulation is across the whole codebase, although when I say “encapsulation” here, I am using that term in a broad sense that means more than just technical issues such as what proportion of data members are marked private. I’m talking about a human issue here (and therefore, sadly, an all but impossible one to measure, though we know it when we see it).

So for example: when I am reading a codebase that I’m not already familiar with, if I come across an object whose class is called Employee or HashTable or CharacterEncoding, I can be reasonably sure that I understand what that class is meant to be doing, and I can take a good guess at the meanings of methods invoked on it—the radius of comprehension is pleasantly low. If I come across objects of class EmployeeFactory, I can probably take a good guess and likely be about right. If I find a CharacterEncodingMediatorFrobnicationDecorator, there is no way I am going to have a clear idea what that’s about unless I go and read the code for myself, so the radius of comprehension grows. Class naming is only one of many factors that contribute to the radius of comprehension: others include immutability of objects, functions that are guaranteed free of side-effects and—the hardest to quantify—the conceptual unity of the various modules.

The significance of the radius is obvious: when it is low, we need load only a little of the code into our minds at once in order to understand the part we’re trying to read, debug or enhance. When the radius is high, valuable mindspace has to be given over to matters that are not directly relevant to the problem we’re trying to solve; and the more things we have to think about at once, the less attention we can give to the core issue.

The radius of comprehension is all about how much you have to hold in your head before you can start being productive. Joel Spolsky has written about the problem of interruptions—how they knock programmers out of “the zone” so that we have to reload all our mental state again before we can get back to useful work (see point 8 of The Joel Test, “Do programmers have quiet working conditions?”). This being so, we need to build our software in such a way that programmers have a minimum amount to reload after each interruption. And this is particularly important for designers of libraries and (where possible) frameworks.

How can we reduce the radius? In general terms, it’s just Occam’s razor: do not multiply entities without necessity. Each library should provide the smallest possible number of API classes, and each should support the minimum number of methods. This much is obvious. But can we go beyond these generalities to propose some tentative guidelines for reducing the radius? We might try the following, with appropriate humility, in the hope that criticism of them will lead to better rules:

- Each kind of thing should have only one representation. This seems so obvious as scarcely to need stating, but Java’s misguided distinction between built-in arrays and ArrayList makes it a point worth stating.

- So far as possible, objects should represent actual things. Sometimes design patterns introduce classes to represent abstractions like iterations, strategies and decorators: that may be a necessary evil, but it’s an evil nevertheless. Every such class increases the radius.

- Immutable objects are easier to think about than mutable. If an object is guaranteed immutable, then the likelihood that I will need to read its source-code to understand it is greatly reduced.

- Similarly, functions without side-effects are easier to think about than those that change the state of one or more objects.

When languages have syntax that allows us to mark objects as immutable or functions as free of side-effects, we should use them: such notation is documentation that the compiler can check for us. The absence of such facilities from extremely dynamic languages such as Ruby is a weakness.
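Ruby offers no notation that a compiler can check, but Object#freeze gives a run-time approximation of the first idea, and a method that returns a new object instead of changing its receiver illustrates the second. What follows is a minimal sketch, assuming Ruby 2.5 or later (where FrozenError exists); Point is a hypothetical class made up for the example.

```ruby
# A small value object made immutable at run time with Object#freeze.
# Point is a hypothetical class invented for this sketch.
class Point
  attr_reader :x, :y

  def initialize(x, y)
    @x = x
    @y = y
    freeze # any later assignment to an instance variable raises
  end

  # Side-effect-free: returns a new Point and leaves the receiver untouched.
  def shifted(dx, dy)
    Point.new(x + dx, y + dy)
  end

  # Tries to mutate the receiver; fails because the object is frozen.
  def shift!(dx, dy)
    @x += dx
    @y += dy
    self
  end
end

origin = Point.new(0, 0)
moved = origin.shifted(3, 4)

# Record whether the attempted mutation was rejected.
mutation_blocked =
  begin
    origin.shift!(1, 1)
    false
  rescue FrozenError # assumes Ruby 2.5 or later
    true
  end
```

A reader who sees freeze in the constructor can stop worrying about who else might change a Point, which is the reduction in radius that the guidelines above are after. The check happens only when the offending line runs, though, so it is weaker than a guarantee made at compile time.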

Finally, as Bjarne Stroustrup noted in The C++ Programming Language (2nd edition), “Design and programming are human activities; forget that and all is lost… There are no ‘cookbook’ methods that can replace intelligence, experience and good taste.” I think that the radius of comprehension is a useful concept, but that it will serve us best if we just have it in mind when designing APIs, rather than blindly following rules that are intended to reduce it.

Earlier in my career, I spent ten years working on a proprietary text-and-objects database, written entirely in C, that had to build its object orientation out of spare parts. By all standard expectations, it should have been nightmarish. But in fact the code had a very low radius of comprehension, simply because it was designed with care, attention and taste by a very talented and diligent architect. To work on that very large system, I had to have only a very small part of it in my mind at a time. I can only hope that in ten years’ time, people who’ve worked with me are able to say the same about my code.

Mike Taylor started programming on a PET 2001 in 1980, and sold his first commercial programs, for the VIC-20, two years later at the age of 14. At Warwick University in 1988, he wrote MUNDI, the first MUD to run over the Internet. He works for Index Data, the world’s smallest multinational, for whom his free software releases include query parsers, an OpenURL resolver, and toolkits for interrogating remote IR servers. In his spare time, he is a dinosaur paleontologist: he described and named the sauropod Xenoposeidon in 2007 and revised Brachiosaurus in 2009. His new programming blog, The Reinvigorated Programmer, joins the perennial favorite Sauropod Vertebra Picture of the Week, or SV-POW! for short. Mike’s written a lot of Perl software, but has recently got better and now writes mostly Ruby. One day, he really, really will learn Lisp.

-

Marketing Tips2 days ago

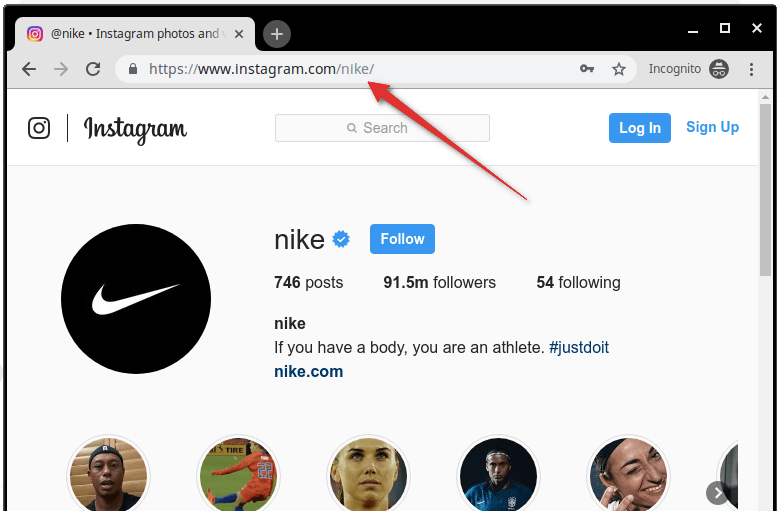

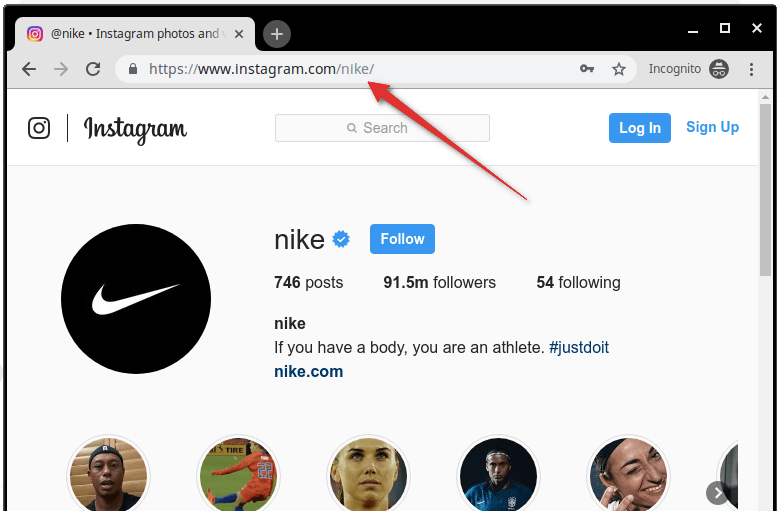

Marketing Tips2 days agoWhat is my Instagram URL? How to Find & Copy Address [Guide on Desktop or Mobile]

-

Business Imprint3 days ago

Business Imprint3 days agoAbout Apple Employee and Friends&Family Discount in 2024

-

App Development3 days ago

App Development3 days agoHow to Unlist your Phone Number from GetContact

-

News4 days ago

News4 days agoOpen-Source GPT-3/4 LLM Alternatives to Try in 2024

-

Crawling and Scraping4 days ago

Crawling and Scraping4 days agoComparison of Open Source Web Crawlers for Data Mining and Web Scraping: Pros&Cons

-

Grow Your Business2 days ago

Grow Your Business2 days agoBest Instagram-like Apps and their Features

-

Grow Your Business4 days ago

Grow Your Business4 days agoHow to Become a Prompt Engineer in 2024

-

Marketing Tips2 days ago

B2B Instagram Statistics in 2024