I've compiled a top list of iOS and Android augmented reality SDKs (free and paid) to help shape your AR development.

It has been a year since the first news of a global pandemic started hitting us on a day-to-day basis. Our entire lifestyle has undergone a...

WWDC 2020 took place a month ago, but the whole world is still discussing the innovations presented by Apple. Needless to say, thousands of iPhone...

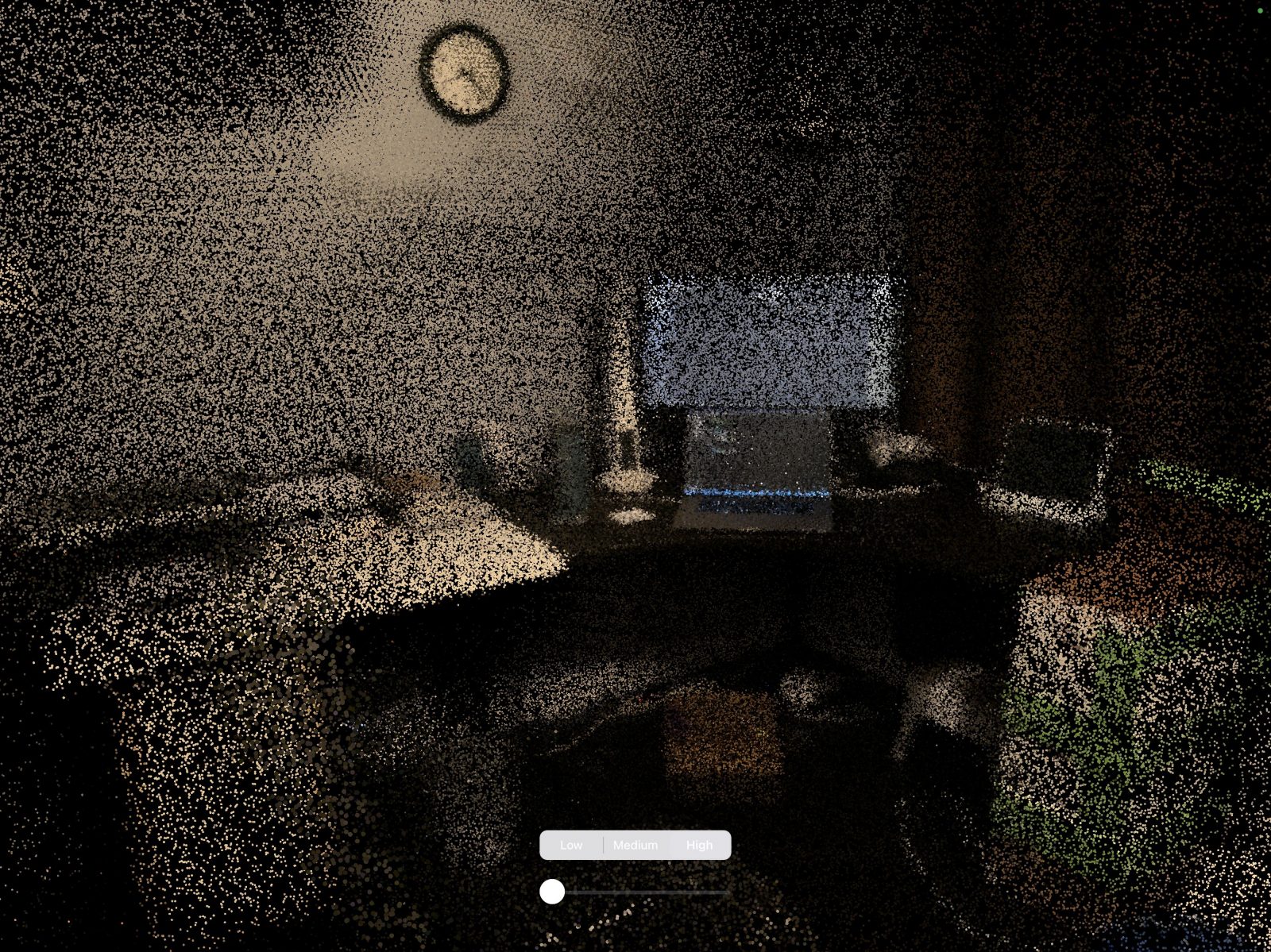

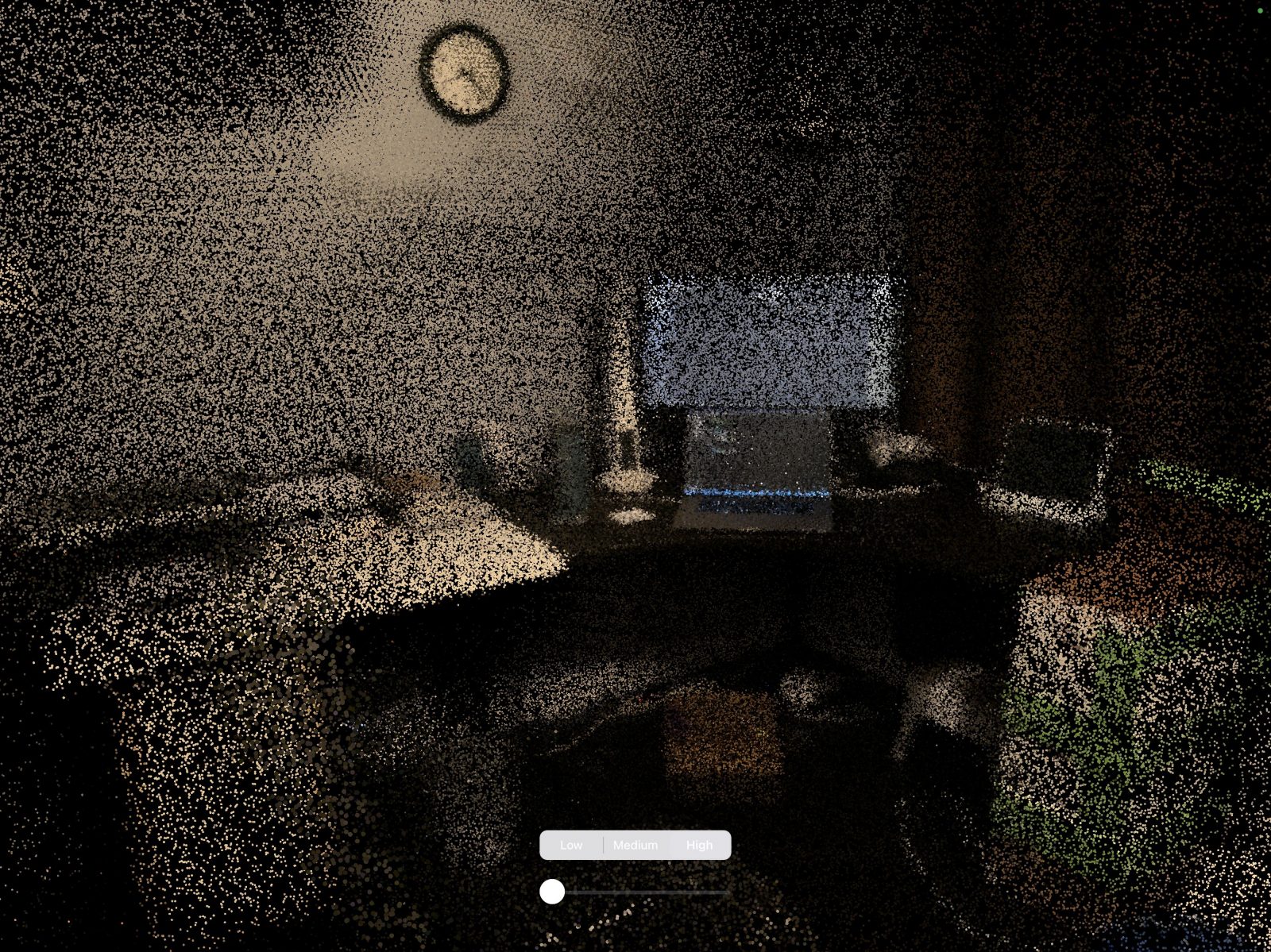

Earlier this year, Apple launched the 2020 iPad Pro with a transformative technology: LiDAR. They simultaneously released ARKit 3.5, which added LiDAR support to iOS. Just...

I have a project idea that I want to develop as a website and an app (iOS and Android). Useful Directory Listing Websites and Mobile Apps...
