LiDAR or not? ARKit 4 + iOS 14

Earlier this year, Apple launched the 2020 iPad Pro with a transformative technology: LiDAR. They simultaneously released ARKit 3.5, which added LiDAR support to iOS. Just 3 months later, alongside the reveal of iOS 14, they unveiled ARKit 4, which showed a massive leap forward in LiDAR capabilities throughout ARKit and cemented Apple’s commitment to the technology. Now, with rumors of a new LiDAR-enabled iPhone 12 around the corner, you may be wondering: should I care?

This article will answer that exact question by evaluating the use cases of LiDAR and comparing its effectiveness to the deep learning/computer vision models ever-present in our portable devices today.

Background

Hardware Used

- iPad Pro 12.9-inch, 4th generation (LiDAR, A12Z chip with Neural Engine) [1]

- iPad Mini, 5th generation (no LiDAR, A12 chip with Neural Engine) [2]

These two devices made a good comparison pair because they share the same CPU architecture and Neural Engine, which should result in machine learning models running nearly identically on both. For AR applications that aren’t CPU-demanding, the only meaningful difference should be the LiDAR sensor.

LiDAR Data Available

In ARKit 3.5, Apple augmented existing AR features like Raycasting, Motion Capture, and People Occlusion with LiDAR data to improve their effectiveness. They also provided a couple of brand new use cases of LiDAR such as illuminating real-world surfaces with virtual lighting and allowing virtual objects to collide with real-world surfaces [3].

ARKit 4 further improved those features and introduced the Depth API, which provides the depth of each pixel, in meters from the camera, on every frame at 60 fps, along with a confidence value for each depth reading [4]. Together with the Scene Geometry API, which first shipped in ARKit 3.5 and returns a mesh of the surrounding environment [3], this gives developers direct access to LiDAR data.

Now, let’s see if this data is any good!

Comparison

Instant AR Placement

Virtual object placement works in iOS by first identifying the properties of a surface, e.g. the plane vectors, and then placing the virtual object on it. Surfaces can be horizontal, vertical, a face, an image (e.g. a book or poster), or an object. You can explore these surface options, along with ARKit in general, in Apple’s Reality Composer [5].
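To make the "identify the plane, then place the object on it" idea concrete, here is a minimal sketch of the underlying geometry: intersecting a ray from the camera with a detected plane. It is plain Python rather than ARKit code, and the function name `ray_plane_hit` and its parameters are my own for illustration, not part of any Apple API.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_plane_hit(origin: Vec3, direction: Vec3,
                  plane_point: Vec3, plane_normal: Vec3) -> Optional[Vec3]:
    """Return the point where a ray meets a plane, or None if it never does.

    The plane is described by any point on it plus its normal vector
    (hypothetical stand-ins for the "plane vectors" a framework would detect).
    """
    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-9:
        return None  # Ray is parallel to the plane.
    diff = (plane_point[0] - origin[0],
            plane_point[1] - origin[1],
            plane_point[2] - origin[2])
    t = dot(diff, plane_normal) / denom
    if t < 0:
        return None  # Plane is behind the camera.
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


# Camera held 1.5 m above the floor, looking forward and down.
camera = (0.0, 1.5, 0.0)
look = (0.0, -1.0, -1.0)
floor_point, floor_normal = (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)
placement = ray_plane_hit(camera, look, floor_point, floor_normal)
```

The hard part in practice is not this intersection but knowing where the plane is in the first place, which is exactly where depth perception comes in.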

On iOS devices without a LiDAR sensor, the user is prompted to move the device around before placing an object so that the machine learning models can get a better understanding of the surfaces. The movement is required because the cameras need an accurate perception of depth before a virtual object can be placed accurately in the real world, and seeing the room from several camera angles lets the models do a better job [6]. This process is called onboarding. It makes object placement slow and annoying for users, and it’s something LiDAR improves upon significantly.

With LiDAR, these depth estimates happen almost instantly, allowing users to place objects as soon as a plane is visible. You can test this with ARKit 4’s object placement, which automatically uses LiDAR if the device supports it. The effect is especially pronounced in low light. Below are two videos I shot running Apple’s Measure app on each iPad in a low-light environment.

LiDAR-enabled iPad: https://youtu.be/6qDCBBi4NV8

Non-LiDAR iPad: https://youtu.be/rGhIlLSaKOk

As shown in the videos, the LiDAR experience is a lot simpler for the user and allows for object placement in adverse conditions such as low light.

People Occlusion

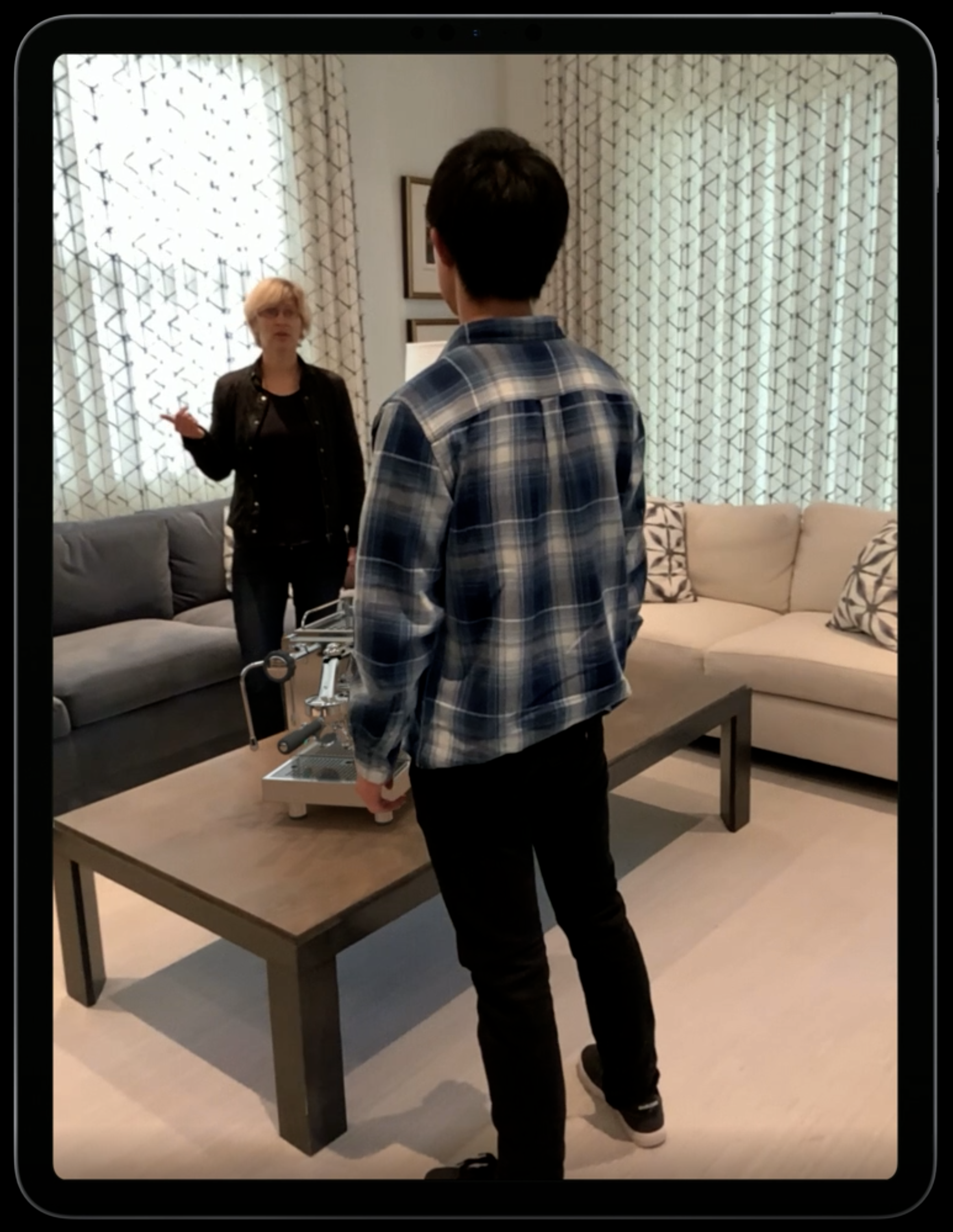

Apple unveiled People Occlusion [7] in ARKit 3/iOS 13, which allows AR objects to be rendered correctly as people pass in front of them in the camera frame. You can see its effect in the comparison below:

So how does this work? Apple explains the magic in the ARKit 3 introduction video [8]: 1) A machine learning model figures out which pixels are people. 2) A second machine learning model estimates the depth of each person to determine whether the person should cover the AR object or be covered by it. 3) Using this data, the layers are drawn in the correct order.

Analyzing step 2, however, it quickly becomes apparent that a) this is quite a difficult task for a machine learning model and b) this all-or-nothing approach to deciding whether a person is in front of the object doesn’t work when half of the person is behind the object and the other half isn’t. Running ARKit 3 on this situation yielded:

Instead of estimating the distance using machine learning, the model in step 2 above could be replaced with a LiDAR sensor, which actually measures how far each pixel is from the camera. This data, which comes in the form of a depth map (explored below), can be used to determine exactly which parts of the camera image should be in front of or behind the AR object. This is exactly what Apple did in ARKit 4, enabling much more accurate People Occlusion than a camera alone. Or so Apple tells us. In my testing, Apple’s current implementation failed in many circumstances, and about half the time I got results like the one below, even with the $1000 iPad Pro and its LiDAR sensor:

Overall, this means that while LiDAR does improve on camera-only depth perception, either the sensor itself isn’t perfect or Apple’s software still needs work to get this exactly right.

Occlusion behind real-world objects

As explained in the People Occlusion section above, non-LiDAR devices achieve the occlusion effect via a machine learning model that identifies people and then estimates their distance from the camera. LiDAR devices, on the other hand, don’t need to know that they are looking at people at all. They simply know how far each pixel is from the camera (as explained in the Background section) and can determine, on a per-pixel level, whether that pixel should occlude the virtual object or be occluded by it.
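The difference between the two approaches can be sketched in a few lines. This is an illustrative toy in plain Python, not Apple’s implementation: the function names, the boolean person mask, and the single-number depths are all assumptions made for the example, and the virtual object is treated as sitting at one fixed distance.

```python
from typing import List


def occlude_all_or_nothing(person_mask: List[List[bool]],
                           person_depth_m: float,
                           object_depth_m: float) -> List[List[bool]]:
    """Camera-only style: one depth estimate for the whole person.

    Either every person pixel hides the virtual object or none does.
    """
    person_in_front = person_depth_m < object_depth_m
    return [[is_person and person_in_front for is_person in row]
            for row in person_mask]


def occlude_per_pixel(depth_map_m: List[List[float]],
                      object_depth_m: float) -> List[List[bool]]:
    """Depth-map style: a real pixel hides the virtual object wherever
    the measured surface is closer to the camera than the object."""
    return [[real_depth < object_depth_m for real_depth in row]
            for row in depth_map_m]


# A person straddling a virtual object placed 2 m away:
# the left pixel is 1 m from the camera, the right pixel is 3 m away.
mask = [[True, True]]
depth = [[1.0, 3.0]]
coarse = occlude_all_or_nothing(mask, person_depth_m=1.0, object_depth_m=2.0)
fine = occlude_per_pixel(depth, object_depth_m=2.0)
```

Here `coarse` marks both pixels as hiding the object, even though the right one is actually behind it, while `fine` only marks the left pixel. The per-pixel version also never asks whether a pixel belongs to a person.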

This much more fine-grained level of detail allows LiDAR-equipped devices to occlude virtual objects behind any real-world surface or object, and to easily handle cases where only certain parts of the virtual object are visible. This works well in many cases. However, I was able to reproduce very obvious flaws in the implementation quite easily. For example, here’s a virtual vase clearly behind an object:

And here’s the iPad not occluding the object at all, even though it should.

Clearly there is still work to do.

Cost

LiDAR sensors are expensive, so the tech is currently only available in the 2020 iPad Pros, which start at $800 USD and only increase in price if you want a larger screen or more than 128 GB of storage. This means that LiDAR is less likely to show up in lower-tier phones and, in turn, many, if not most, people won’t be carrying around a LiDAR device for the foreseeable future.

This means that if you are developing something that uses the LiDAR-specific features mentioned in the new features section below, expect only a fraction of the iOS device ecosystem to be able to use it.

However, on the flip side, now that LiDAR devices are coming, if you decide to build things with the rest of ARKit (all of the features mentioned above), then not only will these features run on the majority of iOS devices, but they’ll also get better over time for your users as they migrate to LiDAR-enabled devices, all without any extra code on your part. This makes AR development all the more attractive right now!

Other Considerations

Deep learning models for computer vision are getting better every day [9]. Thousands, if not millions, of developers around the world are working on improving them. As this technology improves and Apple adopts new methodologies and technologies, you’ll start to see the LiDAR-less AR experience improve on all supported devices. This means that while LiDAR has a lead on distance perception right now, that lead is getting smaller every day, and one day camera-only depth estimation might be just as accurate (if not more so). Elon Musk sure thinks so.

If that happens, the time spent building LiDAR-specific experiences may turn out to be an investment for the short-to-medium term only, while computer vision plays catch-up. Granted, this rapid improvement of computer vision is just speculation, and even if it happens, it will take several years at the very least.

Another limitation is the 5 m range of the LiDAR sensors in the iPad Pros. This means that a) your experiences can’t place objects (or use the LiDAR sensor in any way) more than 5 meters away from the user and b) even within that 5 m range, LiDAR points become quite sparse as you get further from the device, which prevents very precise data from being available at longer distances. For example, in the Depth Map section below, I show a point cloud that illustrates this lack of density when the points of interest are 2 meters from the user.

New Features Enabled by LiDAR

Scene Geometry

Lets a user create a topological map of their space with labels identifying floors, walls, etc. Companies like canvas.io [10] are already using this feature to enable mapping of rooms/buildings using just the LiDAR scanner on the 2020 iPad Pro.

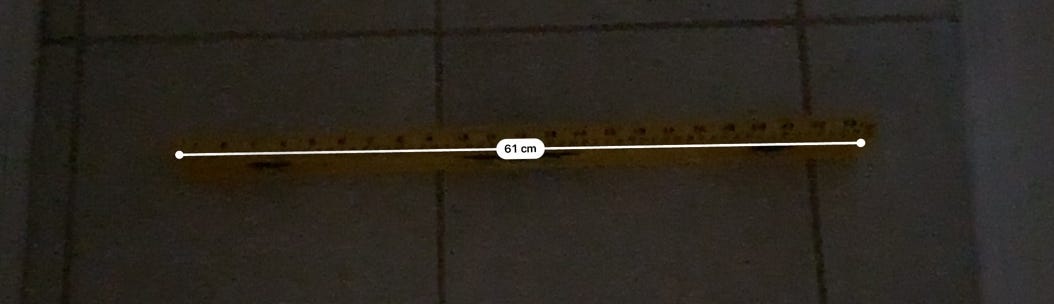

Canvas.io claims that when you scan your house with the LiDAR scanner, the output 3D model will have an error of at most 1–2%! This is far more accurate than measurements taken from non-LiDAR devices. I tried using Apple’s Measure app to “measure” the length of a tape measure, and LiDAR scans proved to be several times more accurate in darker conditions. However, in well-lit conditions, camera-only measuring worked almost flawlessly as well (down to the centimeter, at least).

Note: even though it looks like the picture is darker in the iPad Mini shot, the two pictures were taken in the exact same light. This may have to do with the cameras on the iPad Pro being better at brightening up low-light images.

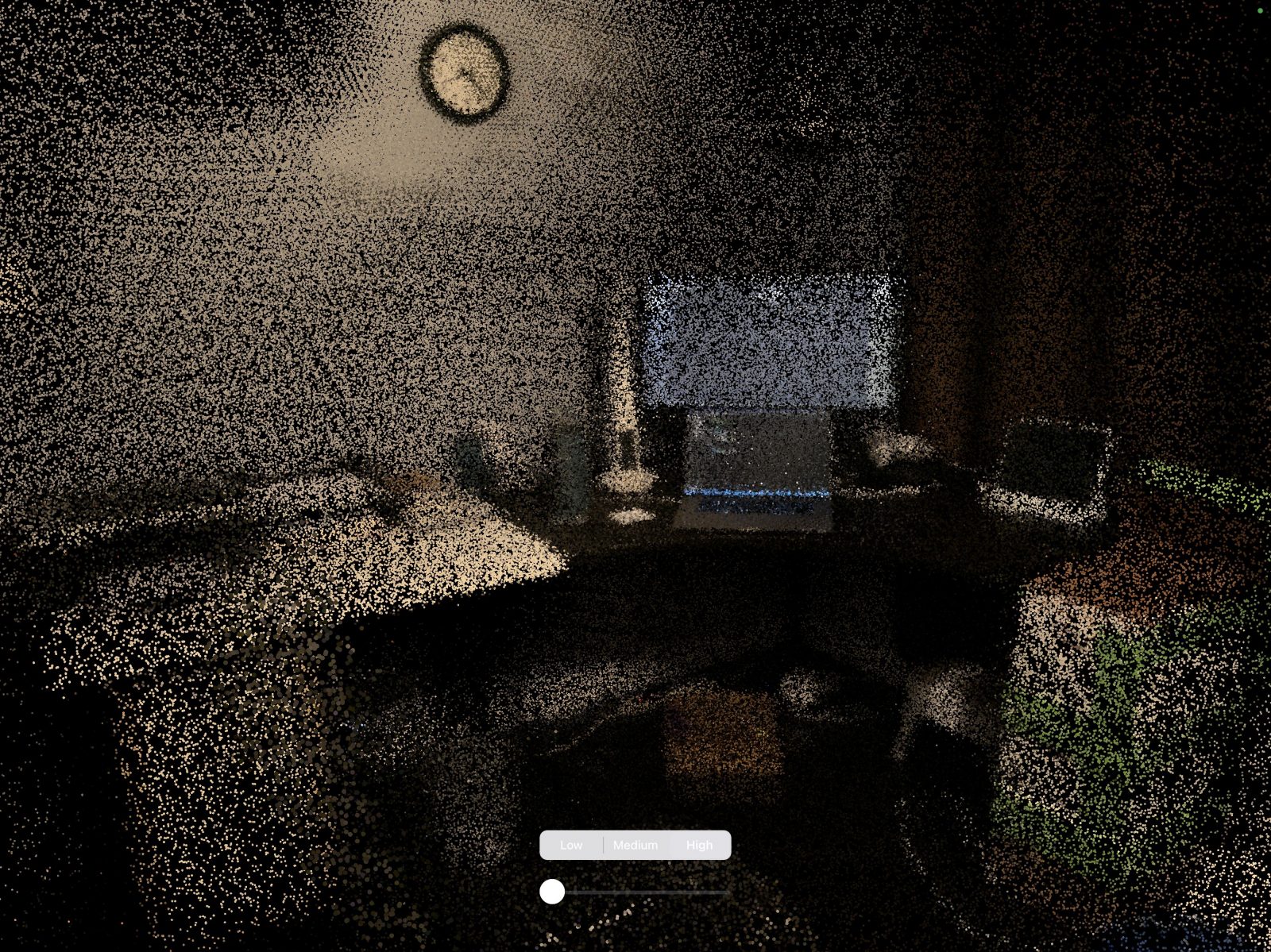

Depth Map

ARKit 4 gives applications access to a depth map on every AR frame. The depth map maps each pixel in the AR frame to its distance from the camera. You can feed this depth map, in combination with angle and color data from the camera, into a Metal vertex shader that unprojects the individual LiDAR points into 3D space and colors them. Then, by saving the points of each frame into a persistent 3D state, we end up with a point cloud, which can then be rendered using Metal. Here’s the result of running this code on a 2020 iPad Pro:

The varying densities of points are caused by how close I got to each cluster of points with the LiDAR sensor. Looking at the top left of the image, you can see that the points aren’t very dense, since I didn’t get very close to them (about 2 meters away). On the other hand, looking near the center of the image, you can almost see individual keyboard keys, along with the backlighting of my laptop’s keyboard. To achieve this precision, I passed the LiDAR sensor over my laptop at a distance of about 10 cm (any similarly close distance should achieve comparable results).
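For readers curious about the unprojection step, here is a minimal sketch of the math in plain Python (the real thing runs in a Metal shader). It assumes a simple pinhole camera model with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, a camera space with x to the right, y down, and z forward, and confidence values of 0, 1, and 2 for low, medium, and high. The function name and the 5 m cutoff parameter are my own choices for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def unproject_depth_map(depth_m: List[List[float]],
                        confidence: List[List[int]],
                        fx: float, fy: float, cx: float, cy: float,
                        min_confidence: int = 2,
                        max_range_m: float = 5.0) -> List[Point]:
    """Turn a depth map into camera-space 3D points (pinhole model).

    depth_m[v][u] is the distance in meters at pixel column u, row v.
    Pixels with low confidence or outside the sensor range are skipped.
    """
    points: List[Point] = []
    for v, (depth_row, conf_row) in enumerate(zip(depth_m, confidence)):
        for u, (d, c) in enumerate(zip(depth_row, conf_row)):
            if c < min_confidence or d <= 0.0 or d > max_range_m:
                continue
            # Scale the pixel offset from the principal point by depth.
            x = (u - cx) * d / fx
            y = (v - cy) * d / fy
            points.append((x, y, d))
    return points


# Tiny 2x2 example with made-up values.
depth = [[2.0, 2.0],
         [6.0, 1.0]]
conf = [[2, 0],
        [2, 2]]
cloud = unproject_depth_map(depth, conf, fx=2.0, fy=2.0, cx=0.0, cy=0.0)
```

In this toy input, one pixel is dropped for low confidence and one for being beyond 5 m, so only two points survive. To accumulate a persistent point cloud across frames, each camera-space point would additionally need to be transformed into world space using that frame’s camera pose, which this sketch leaves out.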

Other Features

In addition to Scene Geometry and the Depth API, LiDAR also enables a couple of other features, such as allowing virtual light sources to illuminate physical surfaces. This allows for a far more realistic experience in AR apps. Further, the precise scene geometry allows virtual objects to collide with real-world objects and surfaces, enabling many complex experiences that mix the virtual and physical worlds [3].

Summary

As shown in the comparison and discussion of new features, LiDAR brings many useful capabilities that enable experiences that weren’t possible before, along with improving existing experiences. The instant object placement and vastly superior occlusion are just two examples that show LiDAR’s clear advantages.

On the flip side, the added cost of LiDAR sensors was also discussed, which limits the possibility of mass adoption at every price point. Further, we talked about the ever-improving computer vision capabilities of our phone’s cameras and how they may catch up to LiDAR’s depth estimation eventually.

So, let’s break this down into two kinds of experiences:

- For experiences that benefit from LiDAR but don’t require much additional development effort (such as object placement and object occlusion), it’s definitely worth adding the configuration to your app to make the experience significantly better for your LiDAR-enabled users.

- For experiences that only support LiDAR, such as building a 3-D model of your house using the Depth API, you should consider that the adoption of LiDAR might not be super widespread and it may be limited to only expensive “Pro” models of iPhones and iPads which usually aren’t the most popular models. Further, many of these LiDAR features aren’t fully baked yet, so you can’t depend on them working perfectly. If these problems are okay, then LiDAR might enable you to build something that wasn’t possible before!

Overall, LiDAR is an extremely powerful technology and I hope you use it to build something awesome!

References

[1] https://www.apple.com/ipad-pro/

[2] https://www.apple.com/ipad-mini/

[3] https://developer.apple.com/news/?id=03242020a

[4] https://developer.apple.com/news/?id=1u5zg8ak

[5] https://developer.apple.com/augmented-reality/reality-composer/

[7] https://developer.apple.com/documentation/arkit/occluding_virtual_content_with_people

[8] https://developer.apple.com/videos/play/wwdc2019/604/

[9] https://medium.com/@ODSC/the-most-influential-deep-learning-research-of-2019-21936596fb4d

[10] https://canvas.io/
